前言

在上文完成k8s集群搭建的基础上k8s(一)、1.9.0高可用集群本地离线部署记录,承接上文,本文简单介绍一下k8s对外暴露服务

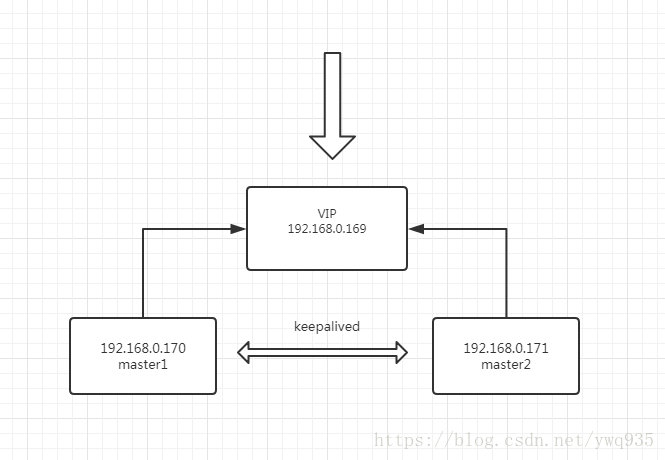

拓扑图:

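I. Ways to expose k8s services externally

1. LoadBalancer

Many mainstream public cloud platforms now offer components built for k8s Services. In essence, the k8s Service asks the cloud platform for a load balancer, which then serves external traffic. This approach is therefore only suitable inside a cloud platform and is skipped here.

2. NodePort

For a given k8s Service, every node in the cluster exposes one designated port to serve that Service. Every node has to provide the port even if it hosts none of the pods backing the Service, so this approach wastes some resources and is inconvenient to manage.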
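As a rough illustration of the NodePort approach, the Python sketch below builds a minimal Service manifest and prints it as JSON, which kubectl also accepts. The name nginx-svc, the app: nginx selector, and nodePort 30080 are assumptions made for the example, not values taken from this deployment.

```python
import json


def nodeport_service(name, selector, port, node_port):
    """Build a minimal NodePort Service manifest as a plain dict."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "NodePort",
            "selector": selector,
            "ports": [
                # port: Service port inside the cluster
                # targetPort: container port on the pods
                # nodePort: port opened on every node
                {"port": port, "targetPort": port, "nodePort": node_port}
            ],
        },
    }


# Hypothetical values, for illustration only.
manifest = nodeport_service("nginx-svc", {"app": "nginx"}, 80, 30080)

if __name__ == "__main__":
    print(json.dumps(manifest, indent=2))
```

Saved to a file, the output could be passed to kubectl create -f. By default the nodePort has to fall in the 30000-32767 range.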

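3. Ingress

With the Ingress approach, three components work together to serve external traffic:

1. reverse proxy LB

2. ingress controller

3. k8s Ingress resource

1. reverse proxy LB

Reverse-proxies each request to a node that serves the backend Service; on that node, kube-proxy then forwards the request to an endpoint pod.

2. ingress controller

Watches the apiserver for changes in Service associations. When an association changes (for example, the pods behind a Service change), it picks up the change dynamically, updates the reverse proxy's configuration file, and hot-reloads it.

3. k8s Ingress

A k8s resource type that routes requests to different backend k8s Services based on the requested virtual host, other request fields, and so on. The ingress controller has to watch the Services referenced by every Ingress object in real time so that the LB configuration stays current through hot reloads.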
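The host-based routing that an Ingress describes can be sketched in a few lines of Python. This is only a toy model of the idea: the two host names are the ones used for testing in this article, while the Service names and ports in RULES are hypothetical.

```python
# Hypothetical Ingress rules: virtual host -> (Service name, Service port).
RULES = {
    "test.nginx.com": ("nginx-svc", 80),
    "ui.traefik.com": ("traefik-web-ui", 8080),
}


def route(host_header):
    """Return the backend Service for a request's Host header, or None.

    Handles plain host names with an optional ":port" suffix only.
    """
    # Drop the port suffix and ignore case, since host names are case-insensitive.
    host = host_header.split(":", 1)[0].lower()
    return RULES.get(host)
```

A request whose Host header matches no rule gets None here; a real proxy would typically answer with a 404 in that case.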

This article uses traefik as the ingress tool for the demonstration.

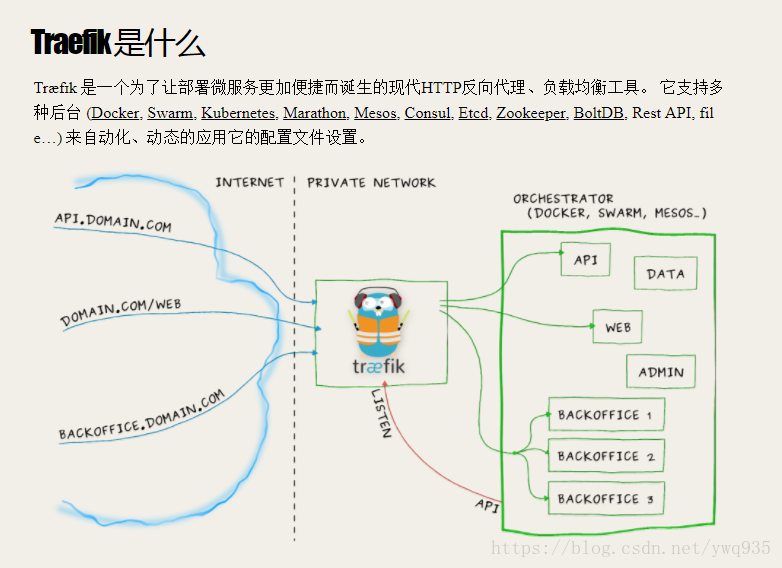

### traefik:

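Microservice architectures and orchestration tools such as Docker and kubernetes became popular only recently, so the commonly used reverse proxy nginx does not itself support k8s Ingress. The Ingress Controller exists as middleware to bridge kubernetes and the front-end LB: it reads k8s Ingress objects, watches Services in real time through the k8s API, and, when a Service mapping changes, rewrites the nginx configuration and hot-reloads it. traefik simplifies this flow. It is itself a lightweight reverse proxy LB, and it natively talks to the kubernetes API and detects backend changes, so it can replace components 1 and 2 above and simplify the architecture.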
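The watch-and-reload behaviour described above can be sketched as a small reconcile step. This is a simplified model, not how any real ingress controller or traefik is implemented: render_config and Controller are hypothetical names, the "config" is a plain string, and 10.244.1.20 is a made-up second pod IP.

```python
def render_config(endpoints):
    """Render a toy proxy config: one line per Service, listing its pod IPs."""
    lines = []
    for service in sorted(endpoints):
        backends = ",".join(sorted(endpoints[service]))
        lines.append(f"{service} -> {backends}")
    return "\n".join(lines)


class Controller:
    """Reloads the proxy only when the rendered config actually changes."""

    def __init__(self):
        self.current = None
        self.reloads = 0

    def sync(self, endpoints):
        """Compare the desired config with the current one; reload on change."""
        new = render_config(endpoints)
        if new != self.current:
            self.current = new
            self.reloads += 1  # a real controller would hot-reload the proxy here
            return True
        return False


controller = Controller()
first = controller.sync({"nginx-svc": {"10.244.0.16"}})
same = controller.sync({"nginx-svc": {"10.244.0.16"}})
scaled = controller.sync({"nginx-svc": {"10.244.0.16", "10.244.1.20"}})
```

Only the first and third calls trigger a reload; the second sees an unchanged set of endpoints and does nothing.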

Official traefik overview diagram:

二、服务部署

首先来部署一组简单的nginx应用容器。

准备好yaml文件:

1 | [root@171 nginx]# ls |

1 | [root@171 nginx]# cat nginx-deploy.yaml |

1 | [root@171 nginx] |

1 | [root@171 nginx] |

创建nginx的deploy、svc、ing资源,使用–record命令后面可以看到revision记录:

1 | kubectl create -f nginx-deploy.yaml --record |

过1分钟后查看状态:

1 | [root@171 nginx]# kubectl get pods -o wide | grep nginx |

在本地curl pod ip和service 的clusterIP测试:

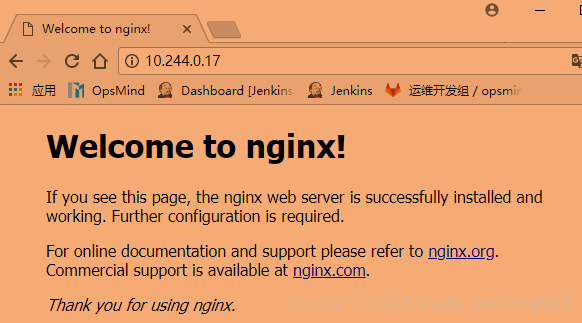

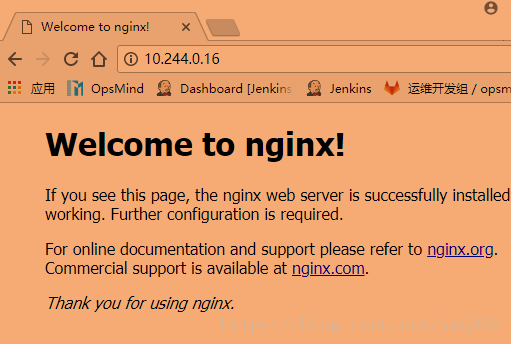

1 | [root@171 nginx]# curl http://10.244.0.16 |

nginx资源已经创建好了,但是目前只能在本地和集群内访问,集群外部无法访问,需要把网络路由打通

三、网络配置

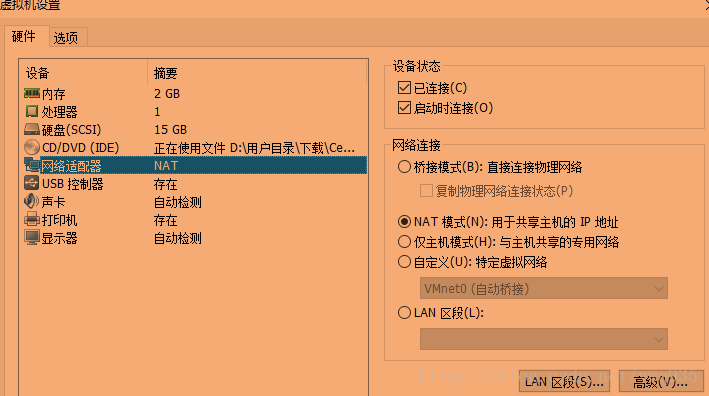

1.vmware网络配置:(无网络限制这一步可跳过)

在公司的电脑上搭的wmware虚拟机,公司网络上网认证限制外网,单人只允许使用单IP访问外网,因此虚拟机为了访问外网,使用的是NAT模式转接的物理机的网络,vmware NAT方式虚拟机通信配置:

首先,每台vm都要配置:

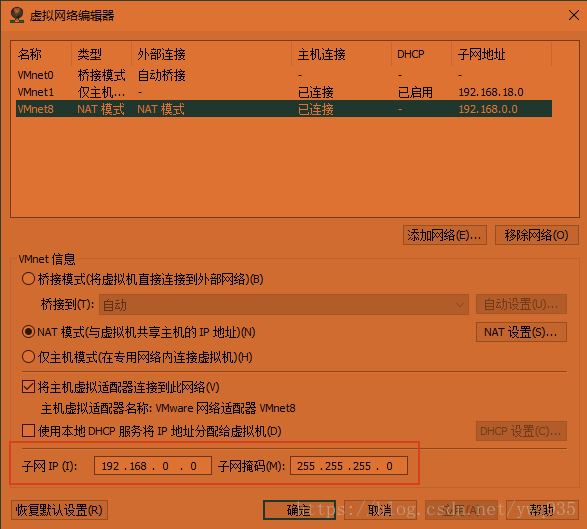

vmware–编辑–虚拟网络编辑器:

为了直接访问vm网段,在windows物理机上配置路由:

打开cmd,输入:

1 | route add 192.168.0.0 MASK 255.255.255.0 192.168.0.1 IF 9 |

IF是接口编号,可以使用route print查看自己电脑上vmnet8网卡对应的网卡接口编号

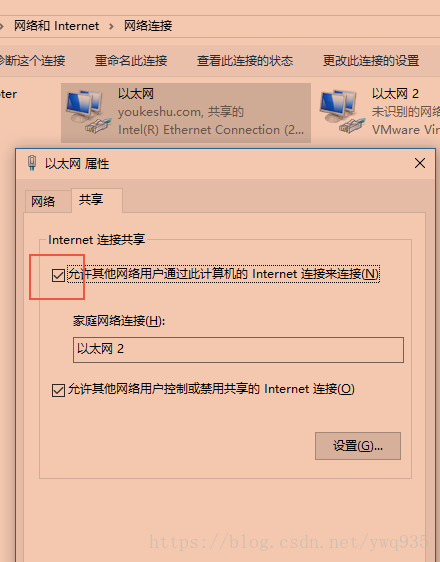

打开windows网络共享中心,找到物理网卡,右键–属性–共享,勾选开启网络连接共享(类似linux内核设置开启IP forward转发):

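2. Routes for the k8s service and pod subnets, and DNS configuration:

Step 1 only opens up the network between the outside and the VMs. If there are no network restrictions and the VMs are on the same subnet as the outside, step 1 can be skipped.

Step 2 adds routes to the k8s service subnet and pod subnet on the external machine.

Add them in Windows cmd:

    route add 10.96.0.0 MASK 255.240.0.0 192.168.0.169 IF 9
    route add 10.244.0.0 MASK 255.255.0.0 192.168.0.169 IF 9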
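To double-check the destination and mask pairs in the two route add commands, the short Python sketch below converts them to CIDR notation with the standard ipaddress module and confirms that the pod IP curled earlier falls inside one of the routed ranges. The resulting ranges presumably correspond to the cluster's service and pod CIDRs; the to_cidr helper is just a name chosen for this example.

```python
import ipaddress

# (destination, mask) pairs taken from the route add commands above.
ROUTES = [
    ("10.96.0.0", "255.240.0.0"),
    ("10.244.0.0", "255.255.0.0"),
]


def to_cidr(destination, mask):
    """Convert a destination/netmask pair to CIDR notation.

    Raises ValueError if the destination has host bits set for that mask.
    """
    return str(ipaddress.ip_network(f"{destination}/{mask}"))


cidrs = [to_cidr(dest, mask) for dest, mask in ROUTES]

# 10.244.0.16 is the pod IP curled earlier in this article.
pod_ip = ipaddress.ip_address("10.244.0.16")
covered = any(pod_ip in ipaddress.ip_network(c) for c in cidrs)
```

Here cidrs comes out as 10.96.0.0/12 and 10.244.0.0/16. A mistyped mask that leaves host bits set in the destination would raise ValueError instead of silently producing a wrong route.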

windows添加dns记录:

C:\Windows\System32\drivers\etc\hosts打开编辑,添加dns记录:1

2192.168.0.169 test.nginx.com

192.168.0.169 ui.traefik.com

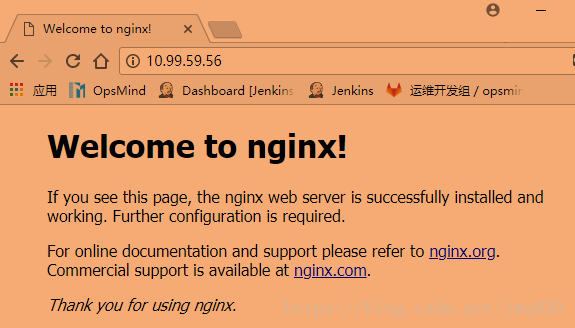

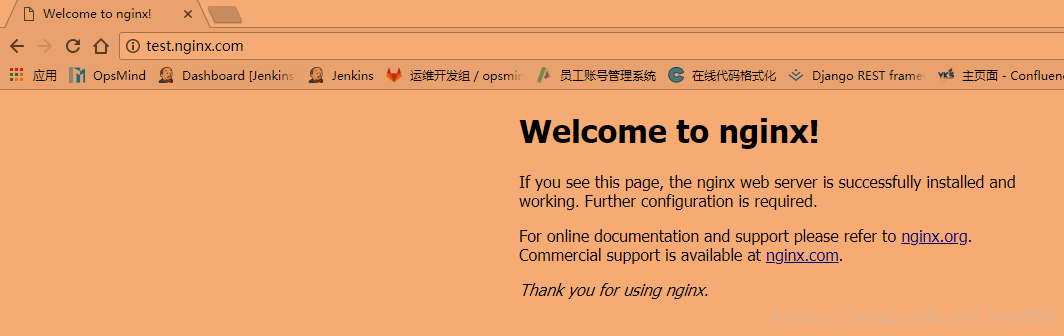

打开浏览器测试访问pod ip、服务的cluster ip:

可以直接访问!

下面开始部署traefik,通过虚拟主机名访问.

四、traefik部署

1 | [root@171 traefik]# ls |

创建资源:

1 | kubectl create -f traefik-rbac.yaml --record |

1分钟后查看创建情况:

1 | [root@171 traefik]# kubectl get pods -o wide -n kube-system| grep trae |

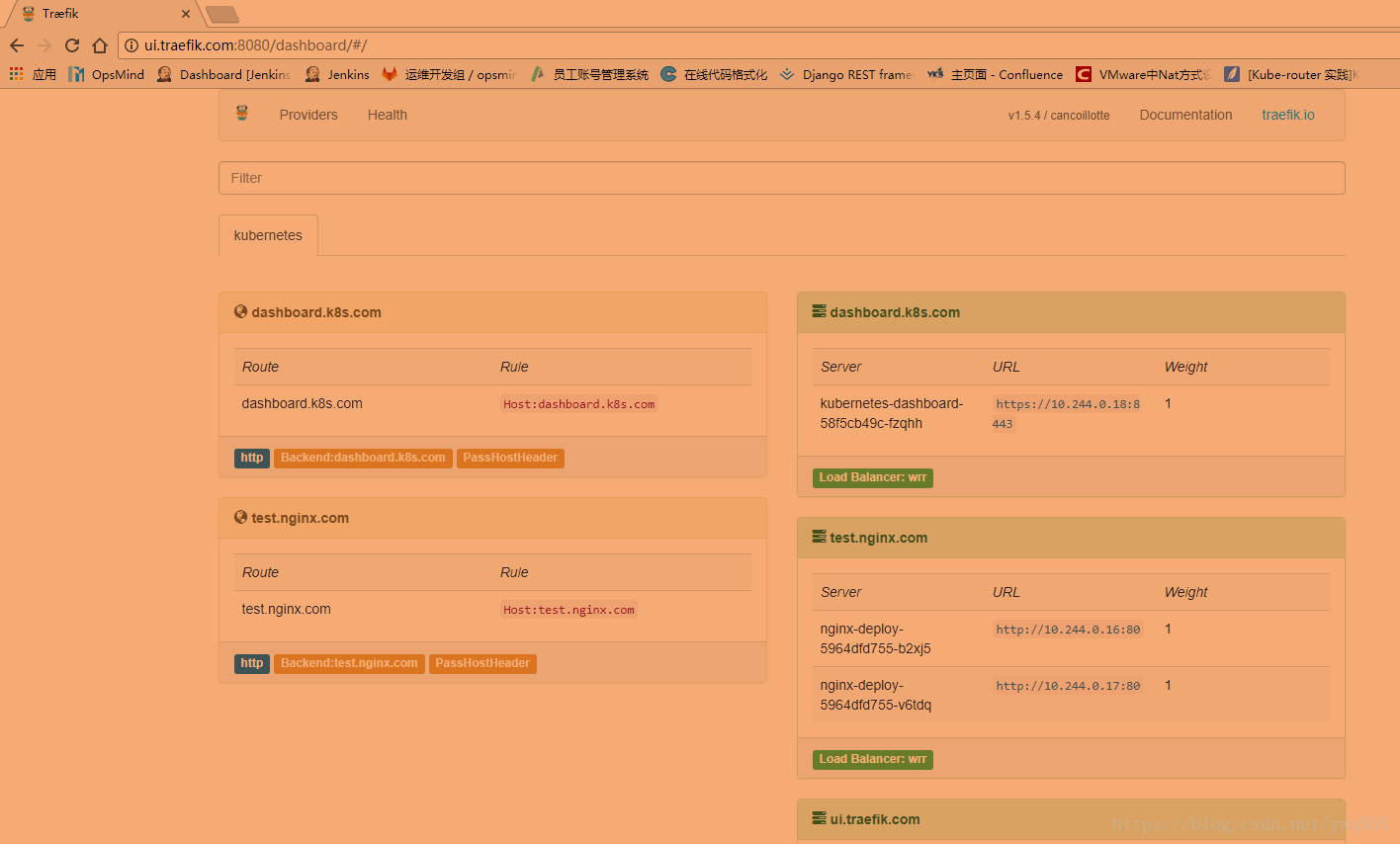

打开浏览器测试:

开启https

提前准备好证书和key:1

2root@h001238:~/traefik/ssl# ls

cert.crt cert.key

创建secret保存https证书:1

kubectl create secret generic traefik-cert --from-file=./cert.crt --from-file=./cert.key -n kube-system

编辑traefik配置文件:

1 | # vim traefik.toml |

创建ConfigMap:

1 | kubectl create configmap traefik-conf --from-file=traefik.toml -n kube-system |

Modify the DaemonSet manifest. After applying it, delete the existing traefik pods so that the new configuration takes effect:

    kind: DaemonSet
    apiVersion: extensions/v1beta1
    metadata:
      name: traefik-ingress-controller
      namespace: kube-system
      labels:
        k8s-app: traefik-ingress-lb
    spec:
      template:
        metadata:
          labels:
            k8s-app: traefik-ingress-lb
            name: traefik-ingress-lb
        spec:
          serviceAccountName: traefik-ingress-controller
          terminationGracePeriodSeconds: 60
          hostNetwork: true
          volumes:
          - name: ssl
            secret:
              secretName: traefik-cert
          - name: config
            configMap:
              name: traefik-conf
          containers:
          - image: traefik
            name: traefik-ingress-lb
            volumeMounts:
            - mountPath: "/opt/conf/k8s/ssl"
              name: "ssl"
            - mountPath: "/opt/conf/k8s/conf"
              name: "config"
            ports:
            - name: http
              containerPort: 80
              hostPort: 80
            - name: admin
              containerPort: 8080
            securityContext:
              capabilities:
                drop:
                - ALL
                add:
                - NET_BIND_SERVICE
            args:
            - --api
            - --kubernetes
            - --logLevel=INFO
            - --configFile=/opt/conf/k8s/conf/traefik.toml

Check the listening ports on the node after the update:

    root@h001238:~/traefik# ss -tnlp | grep trae
    LISTEN 0 128 :::8080 :::* users:(("traefik",pid=18337,fd=6))
    LISTEN 0 128 :::80 :::* users:(("traefik",pid=18337,fd=3))
    LISTEN 0 128 :::443 :::* users:(("traefik",pid=18337,fd=5))

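Enabling sticky sessions

Traefik supports sticky sessions with only a little configuration. There are two methods: change the traefik configuration file, or add annotations on the Service.

Method 1:

Add the [backends] lines shown at the end of the complete ConfigMap below, unindented, to the traefik configuration file to enable sticky sessions for the backend named backend1. This method requires traefik to reload its configuration file frequently and does not suit real-world use, so it is not demonstrated here.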

The complete ConfigMap is as follows:

    apiVersion: v1
    data:
      traefik.toml: |
        insecureSkipVerify = true
        defaultEntryPoints = ["http","https"]
        [entryPoints]
          [entryPoints.http]
          address = ":80"
          entryPoint = "https"
          [entryPoints.https]
          address = ":443"
            [entryPoints.https.tls]
              [[entryPoints.https.tls.certificates]]
              certFile = "/opt/conf/k8s/ssl/kokoerp.crt"
              keyFile = "/opt/conf/k8s/ssl/214417797960145.key"
        [metrics]
          [metrics.prometheus]
          entryPoint = "traefik"
          buckets = [0.1,0.3,1.2,5.0]
        [backends]
          [backends.backend1]
            # Enable sticky session
            [backends.backend1.loadbalancer.stickiness]
    kind: ConfigMap
    metadata:
      creationTimestamp: 2018-10-18T07:58:57Z
      name: traefik-conf
      namespace: kube-system

第二种:在需要添加session sticky功能的service资源上添加注解如下:

1 | annotations: |

完成添加注解配置后即可实现该svc绑定的ingress的域名的session sticky功能。

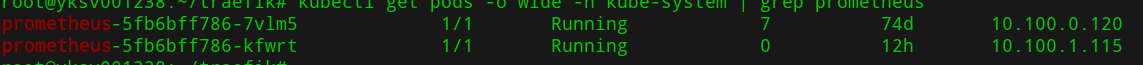

这里使用prometheus举例

将prometheus的deployment的replicas设置数量为2,生成两个pod后端实例:

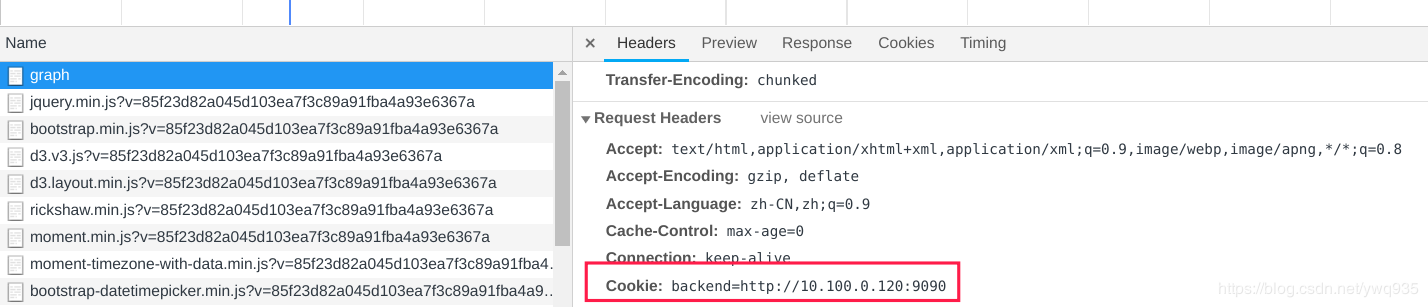

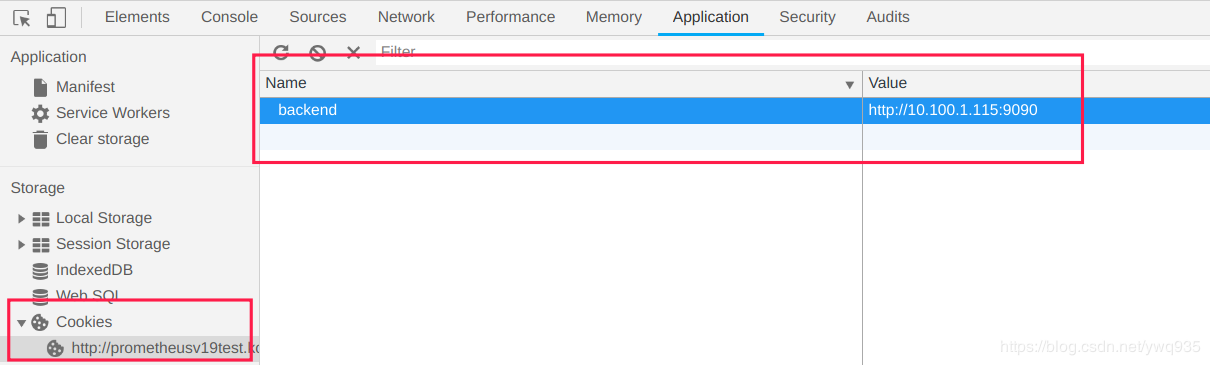

在prometheus的service上添加上方列出的annotation注解后,浏览器访问prometheus,即可看到traefik会为会话添加cookies,key为刚指定的值backend,value默认为后端pod实例:

重复刷新,backend值不会改变,手动清除此cookies后,再次刷新:

session sticky配置完成
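The cookie behaviour observed above can be modelled with a small Python sketch. It is a simplified illustration of cookie-based stickiness in general, not traefik's actual implementation: StickyBalancer is a hypothetical class, and the two backend addresses are made-up stand-ins for the two prometheus replicas. The cookie name backend matches the key seen in the browser.

```python
import itertools


class StickyBalancer:
    """Round-robin balancer that pins a client to one backend via a cookie."""

    def __init__(self, backends, cookie_name="backend"):
        self.backends = list(backends)
        self.cookie_name = cookie_name
        self._cycle = itertools.cycle(self.backends)

    def pick(self, cookies):
        """Return (backend, cookies_to_set) for a request's cookie dict."""
        pinned = cookies.get(self.cookie_name)
        if pinned in self.backends:
            return pinned, {}  # cookie still valid: keep the same backend
        backend = next(self._cycle)  # no usable cookie: fall back to round robin
        return backend, {self.cookie_name: backend}


# Hypothetical pod addresses standing in for the two prometheus replicas.
lb = StickyBalancer(["http://10.244.0.21:9090", "http://10.244.1.22:9090"])
first, set_cookie = lb.pick({})
repeat, _ = lb.pick(set_cookie)
after_clear, _ = lb.pick({})
```

A request that carries the cookie keeps landing on the same backend, while a request without it (for example after the cookie is cleared) is balanced again and may land on the other replica.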

更为详细的会话滞黏配置说明请参考官方文档:

配置文件

Service注解

11.10补充

prometheus + traefik 监控

traefik配置添加metric段:

1 | [metrics] |

更新configmap后重启traefik-ingress-lb容器.

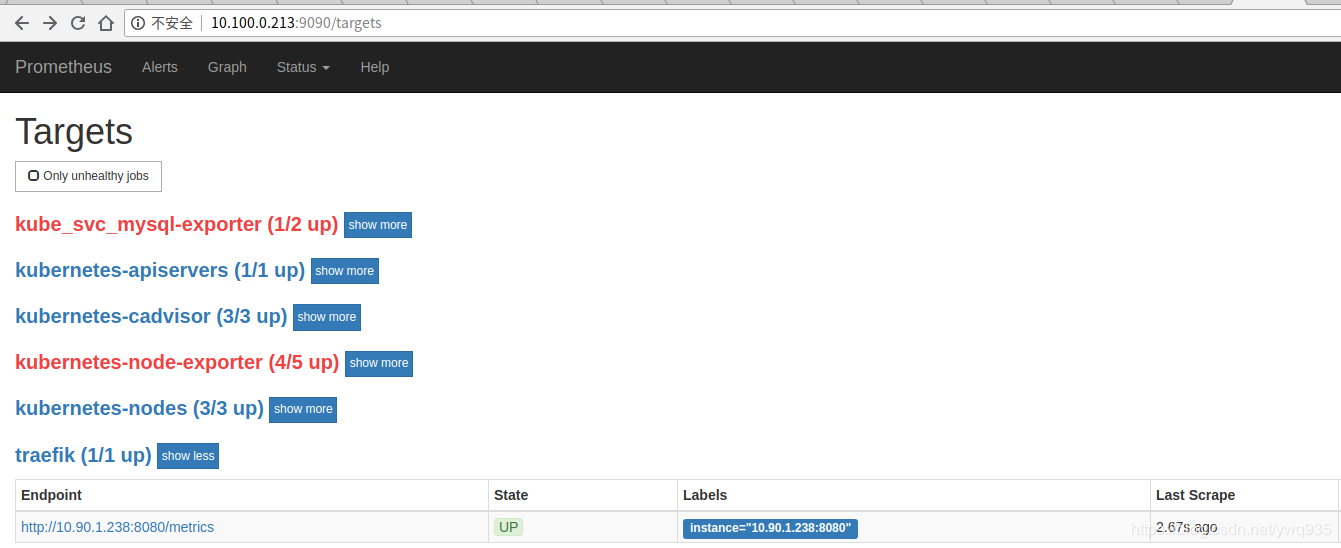

在prometheus中添加job:

1 | - job_name: 'traefik' |

更新configmap后重启prometheus容器.

在prometheus-target中可以看到新job的状态:

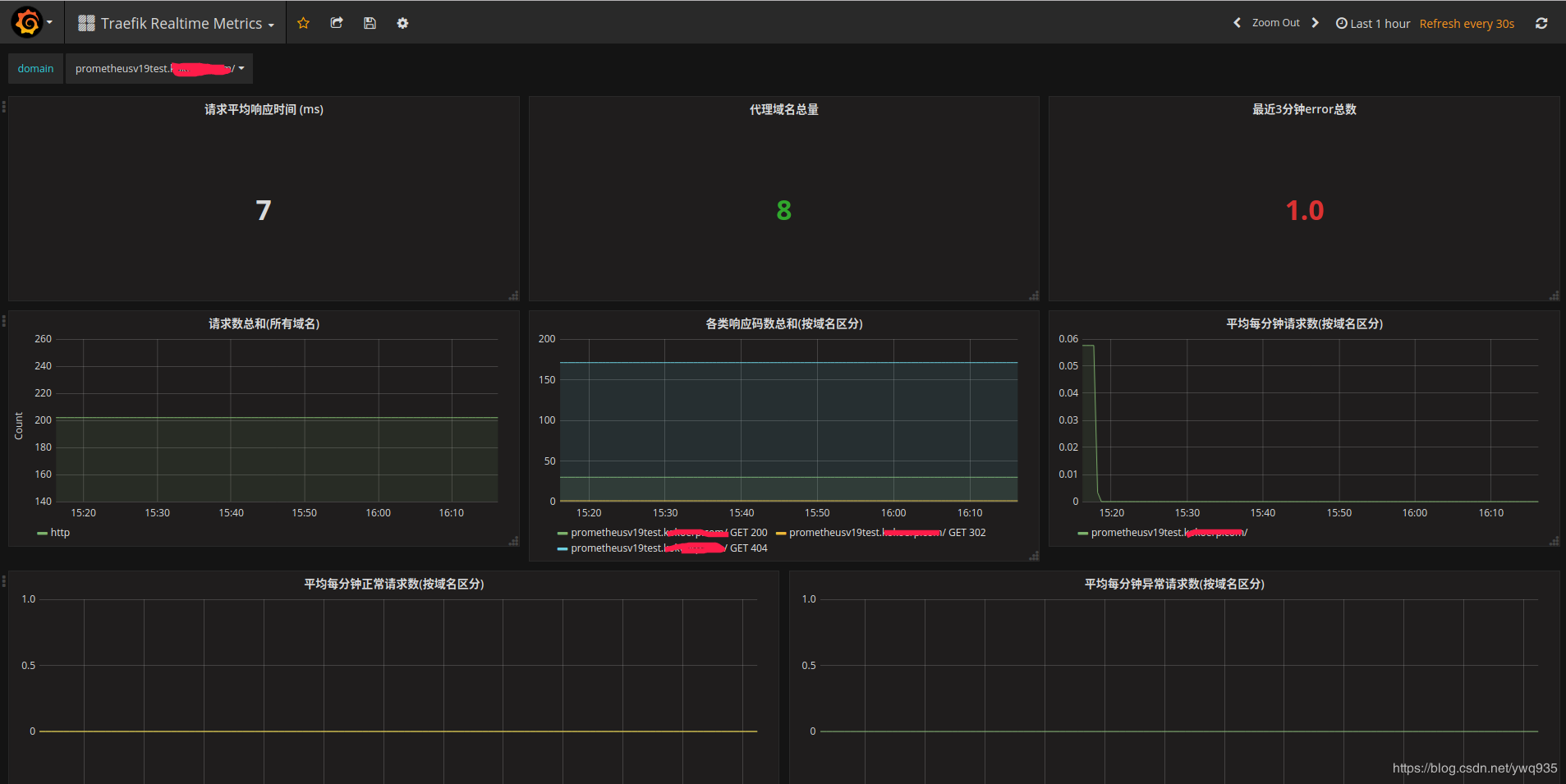

在grafana中导入id为2870的模板,新版本的traefik metrics名称发生了一些小变化,因此默认模板获取不到数据,需自行对照job target详情页修改一下模板,熟悉prometheus和grafana的话也可以进行一些自定义修改::

Prometheus/grafana相关知识及使用可以参考专栏内的其他文章

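Summary

k8s has many functional components, terms, resource types (pod/svc/ds/rs/ingress/configmap/role...), and workflows. They are fairly complex and not easy to understand, so expect to spend time reading the official documentation. k8s moves quickly, with new features arriving and old ones being deprecated, and the official Chinese documentation lags behind, so reading the English documentation directly is recommended.

For convenience, the results here were viewed from Windows; testing with curl on Linux works the same way. Because of the network restrictions, VMware NAT was used, which needs one extra step to connect the physical host's network to the VM network, followed by static routes to reach the cluster's internal networks. When I have time, I'll try GNS3 on my home computer to simulate a router, set up an external BGP peer, and advertise routes into the cluster's BGP.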