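## Preface

The previous post covered how k8s uses traefik to proxy in-cluster services and expose them externally by flexibly injecting proxy configuration (see: k8s (2), External Services). In this post, I use Cisco IOU images in the GNS3 simulator to build a BGP network environment, bridge it through VMware directly to the k8s cluster running in virtual machines, and interconnect it with the cluster's internal BGP network.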

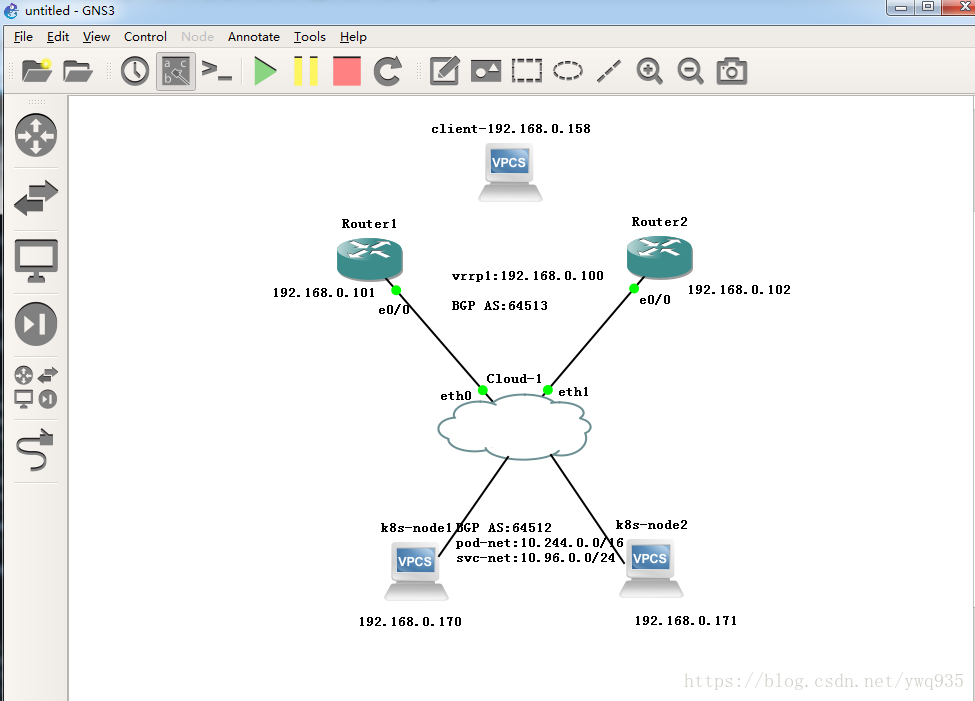

Topology diagram:

1. Router configuration


    ####router1:

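2. kube-router configuration:

For the k8s cluster setup steps, see k8s (1), Offline Local Deployment of a 1.9.0 High-Availability Cluster.

In the kube-router YAML file configured earlier, add the following parameters, which specify the local BGP AS and the peer's AS and IP, among others.

    - --advertise-cluster-ip=true # advertise cluster IPs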

The complete kube-router.yaml file is as follows:

    apiVersion: v1

Delete the previous kube-router deployment, then re-create the resources defined in this YAML file:

    kubectl delete -f kubeadm-kuberouter.yaml

Next, deploy an nginx pod instance for testing. The YAML file is as follows:

    [root@171 nginx]

Check the pod IP and test it locally with curl:

    [root@171 nginx]# kubectl get pods -o wide

3. Verifying the results

Check the BGP neighbors on the router:

    IOU1#show ip bgp summary
    BGP router identifier 192.168.0.101, local AS number 64513
    BGP table version is 19, main routing table version 19
    4 network entries using 560 bytes of memory
    6 path entries using 480 bytes of memory
    2 multipath network entries and 4 multipath paths
    1/1 BGP path/bestpath attribute entries using 144 bytes of memory
    1 BGP AS-PATH entries using 24 bytes of memory
    0 BGP route-map cache entries using 0 bytes of memory
    0 BGP filter-list cache entries using 0 bytes of memory
    BGP using 1208 total bytes of memory
    BGP activity 5/1 prefixes, 12/6 paths, scan interval 60 secs

    Neighbor        V    AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down  State/PfxRcd
    192.168.0.102   4 64513     100     106     19   0    0 01:27:20        0
    192.168.0.170   4 64512     107      86     19   0    0 00:37:19        3
    192.168.0.171   4 64512      98      85     19   0    0 00:37:21        3
    IOU1#
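To make the neighbor table easier to read, here is a minimal Python sketch that parses the three neighbor rows of the `show ip bgp summary` output and labels each session. The function name `parse_neighbors` and the dictionary keys are my own for illustration. It assumes the usual IOS convention that a number in the `State/PfxRcd` column means the session is Established and gives the count of prefixes received, while a word such as `Idle` or `Active` means the session is down.

```python
import re

# Neighbor rows copied from the `show ip bgp summary` output in this post.
SUMMARY = """\
Neighbor        V    AS MsgRcvd MsgSent TblVer InQ OutQ Up/Down  State/PfxRcd
192.168.0.102   4 64513     100     106     19   0    0 01:27:20        0
192.168.0.170   4 64512     107      86     19   0    0 00:37:19        3
192.168.0.171   4 64512      98      85     19   0    0 00:37:21        3
"""

LOCAL_AS = 64513  # from "local AS number 64513" in the same output


def parse_neighbors(text, local_as):
    """Return one dict per neighbor row of a `show ip bgp summary` table."""
    neighbors = []
    for line in text.splitlines():
        fields = line.split()
        # Neighbor rows start with an IPv4 address and have at least 10 columns;
        # the header row and anything else is skipped.
        if len(fields) < 10 or not re.fullmatch(r"\d+(\.\d+){3}", fields[0]):
            continue
        remote_as = int(fields[2])
        state = fields[9]
        neighbors.append({
            "ip": fields[0],
            "as": remote_as,
            # Same AS as the router means iBGP, a different AS means eBGP.
            "type": "iBGP" if remote_as == local_as else "eBGP",
            # Assumption: a numeric State/PfxRcd means Established.
            "established": state.isdigit(),
            "prefixes": int(state) if state.isdigit() else 0,
        })
    return neighbors


if __name__ == "__main__":
    for n in parse_neighbors(SUMMARY, LOCAL_AS):
        status = "up" if n["established"] else "down"
        print(n["ip"], n["type"], status, n["prefixes"])
```

Read this way, 192.168.0.102 is an iBGP peer (same AS 64513) and the two k8s nodes, 192.168.0.170 and 192.168.0.171, are eBGP peers in AS 64512 that each advertise 3 prefixes.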

Check the BGP route entries on the router:

    IOU1#show ip route bgp

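As shown, the BGP neighbor relationships are established and the k8s cluster's internal routes have been learned.

Now check from a test machine:

Change the test machine's default gateway to point at the router, so that it simulates an ordinary PC on the external network:

    [root@python ~]# ip route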

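The test succeeds. At this point, the cluster's internal BGP network and the external BGP network are connected!

Friendly reminder: don't forget to save the router configuration to NVRAM (copy running-config startup-config), or it will be lost on reboot. I hadn't touched network gear in a long time, forgot about this, and got burned once, heh.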

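9-22: a pitfall

Today I tried adding a new node (192.168.20.x/24) to the k8s cluster that is not on the same subnet as the original nodes (192.168.9.x/24). Once it was created, something very strange happened: the peering between kube-router on the new node and the core network device outside the cluster kept flapping between Established and Idle and was very unstable. While the session was Established, packet loss between the new node and the original nodes exceeded 70%; while it was Idle, pings between the nodes were normal.

Screenshot of the problem: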

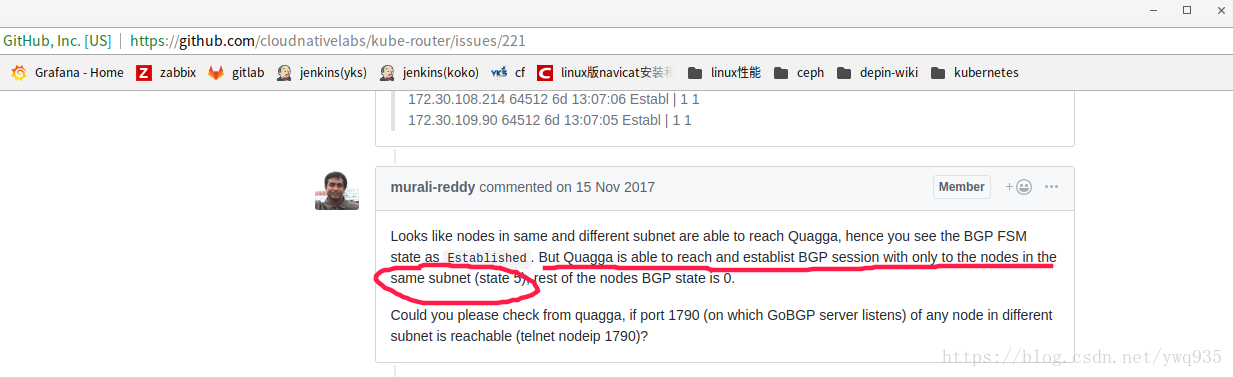

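After entering the kube-router container and running gobgp neighbor, I found that the new node's eBGP session with the external network device and its iBGP sessions with the original nodes kept changing state and never stabilized. I was baffled, until I finally found the only similar kube-router issue on GitHub, where a project member explained it as follows:

GitHub issue link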

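My understanding is:

kube-router only supports iBGP peering between nodes in the same subnet; nodes in different subnets cannot establish a peering. In addition, eBGP has a max-hop setting that defaults to 1. Routers usually let you change this value manually, but earlier versions of kube-router supported only the default of 1 and offered no way to configure it, so an eBGP neighbor also had to be in the same subnet as kube-router. Later versions resolved this: after upgrading, a node can establish eBGP peerings across subnets without problems.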
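The rule above can be illustrated with a small Python sketch. It models only my reading of the issue, not kube-router's actual logic: a peering is expected to work when both ends share a subnet, or when the implementation allows a hop limit greater than 1. The helper names `same_subnet` and `can_peer` and the host addresses are hypothetical, since only the two /24 ranges are known.

```python
import ipaddress


def same_subnet(ip_a, ip_b, prefix_len=24):
    """True if both addresses fall in the same network of the given prefix length."""
    net_a = ipaddress.ip_interface(f"{ip_a}/{prefix_len}").network
    net_b = ipaddress.ip_interface(f"{ip_b}/{prefix_len}").network
    return net_a == net_b


def can_peer(local_ip, peer_ip, multihop=False, prefix_len=24):
    """Simplified model: with a hop limit of 1 the peer must be on the local subnet."""
    return multihop or same_subnet(local_ip, peer_ip, prefix_len)


if __name__ == "__main__":
    old_node = "192.168.9.11"   # hypothetical host in 192.168.9.x/24
    new_node = "192.168.20.11"  # hypothetical host in 192.168.20.x/24

    # Different /24 networks, hop limit fixed at 1: peering not expected to hold.
    print(can_peer(new_node, old_node))
    # Same pair when the hop limit can be raised, as in later versions for eBGP.
    print(can_peer(new_node, old_node, multihop=True))
```

Running a check like this against each planned node address before joining it to the cluster would have flagged the 192.168.20.x node as a cross-subnet peer in advance.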